AutoscalingPolicy

AutoscalingPolicy defines which Workloads should have their Requests and Limits automatically adjusted, when these adjustments should occur, and how they should be applied. By properly configuring an AutoscalingPolicy, you can continuously adjust the Requests of a group of Workloads to a reasonable and efficient level.

Note: If a Pod contains sidecar containers (e.g., Istio), we won’t modify them, and they will be excluded from recommendation calculations. We detect sidecars by diffing the container names between the workload’s Pod template and the actual Pod; any names that exist only in the Pod are treated as injected sidecars.
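The name-diffing rule described in the note can be sketched as follows. This is a minimal illustration, not the product's implementation; the function name and the use of plain lists of container names are assumptions made for the example.

```python
def detect_injected_sidecars(template_containers, pod_containers):
    """Return container names present in the running Pod but not in the template."""
    template_names = set(template_containers)
    # Keep the Pod's container order so the result is stable.
    return [name for name in pod_containers if name not in template_names]


# Hypothetical names: "app" comes from the Pod template, "istio-proxy" was injected.
template = ["app"]
running = ["app", "istio-proxy"]
print(detect_injected_sidecars(template, running))  # ['istio-proxy']
```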

Enable*

Enable toggles whether an AutoscalingPolicy is active (enabled by default).

When set to Disabled, the policy is removed from the cluster and retained only on the server. This operation effectively deletes the AutoscalingPolicy and will trigger the On Policy Removal actions.

Priority

When multiple AutoscalingPolicies match the same Workload, Priority determines which policy takes precedence.

- An AutoscalingPolicy with a higher Priority value will be applied first.

- If two AutoscalingPolicies have the same Priority, the one created earlier will be applied.

The Priority field allows you to flexibly apply AutoscalingPolicies across your workloads.

For example:

- You can configure an AutoscalingPolicy with Priority = 0 that matches most or even all Workloads but does not actively adjust them.

- Then, you can define additional AutoscalingPolicies with higher Priority values to target specific Workloads with more aggressive adjustment strategies.
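The precedence rule above (higher Priority wins, earlier creation breaks ties) can be sketched as a simple selection function. The `Policy` class and its field names are hypothetical and only model the two attributes the rule depends on.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Policy:  # hypothetical model, not the product's schema
    name: str
    priority: int
    created_at: datetime


def select_policy(matching):
    """Pick the winning policy: highest priority, then earliest creation time."""
    if not matching:
        return None
    return min(matching, key=lambda p: (-p.priority, p.created_at))


policies = [
    Policy("catch-all", 0, datetime(2024, 1, 1)),
    Policy("aggressive-a", 10, datetime(2024, 3, 1)),
    Policy("aggressive-b", 10, datetime(2024, 2, 1)),
]
print(select_policy(policies).name)  # aggressive-b
```

Here both high-priority policies beat the catch-all, and `aggressive-b` wins the tie because it was created earlier.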

Recommendation Policy Name*

The RecommendationPolicyName specifies which Recommendation Policy the AutoscalingPolicy should use to calculate recommendations. It defines both the calculation method and the applicable scope.

For details, see Recommendation Policy.

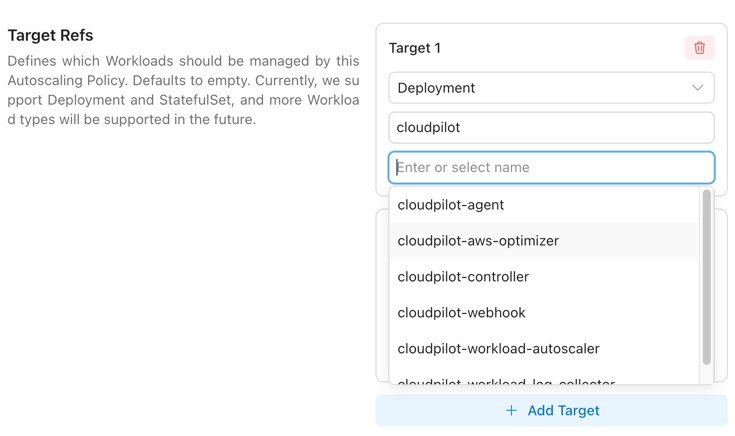

TargetRefs

TargetRefs specify the scope of Workloads to which the AutoscalingPolicy applies. You can configure multiple TargetRefs to cover a broader set of Workloads.

| Field | Allowed Values | Required | Description |
|---|---|---|---|
| Kind | Deployment or StatefulSet | Yes | Type of Workload. Currently supports Deployment and StatefulSet. |
| Name | Any valid Workload name, or empty | No | Name of the Workload. If left empty, it matches all Workloads within the namespace or cluster (depending on Namespace). |
| Namespace | Any valid namespace, or empty | No | Namespace of the Workload. If left empty, it matches all namespaces in the cluster. |

Name and Namespace support shell-style glob patterns (*, ?, and character classes like [a-z]); patterns match the entire value, and an empty field (or *) matches all.

| Pattern | Meaning | Matches | Doesn’t match |
|---|---|---|---|
| * | Any value | web, ns-1, default | (none) |
| web-* | Values starting with web- | web-1, web-prod-a | api-web-1 |
| *-prod | Values ending with -prod | core-prod, a-prod | prod-core |
| front? | front + exactly 1 char | front1, fronta | front10, front |
| job-?? | job- + exactly 2 chars | job-01, job-ab | job-1, job-001 |
| ns-[0-9][0-9]-* | ns- + two digits + - + anything | ns-01-a, ns-99-x | ns-1-a |
| db[0-2] | db0, db1, or db2 only | db0, db2 | db3, db-2 |
| [^0-9]* | Does not start with a digit | app1, ns-x | 9-app |
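To try patterns locally before putting them in a TargetRef, the matching rules above can be approximated with Python's `fnmatch` module. This is a rough sketch, not the matcher the product uses: the `ref` dictionary layout and both function names are assumptions, and because `fnmatch` negates a character class with `!` rather than `^`, the sketch rewrites `[^` to `[!` first.

```python
from fnmatch import fnmatchcase


def glob_matches(pattern, value):
    """Match value against a shell-style glob; an empty pattern matches all."""
    if pattern == "":
        return True
    # Python's fnmatch negates a class with "!", so translate "[^" to "[!".
    return fnmatchcase(value, pattern.replace("[^", "[!"))


def target_ref_matches(ref, kind, namespace, name):
    """ref is a hypothetical dict with Kind, Name, and Namespace keys."""
    return (
        ref["Kind"] == kind
        and glob_matches(ref.get("Namespace", ""), namespace)
        and glob_matches(ref.get("Name", ""), name)
    )


ref = {"Kind": "Deployment", "Namespace": "ns-[0-9][0-9]-*", "Name": "web-*"}
print(target_ref_matches(ref, "Deployment", "ns-01-a", "web-1"))    # True
print(target_ref_matches(ref, "Deployment", "ns-1-a", "web-1"))     # False
print(target_ref_matches(ref, "StatefulSet", "ns-01-a", "web-1"))   # False
```

`fnmatchcase` matches the entire value and is case-sensitive on every platform, which mirrors the whole-value matching described above.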

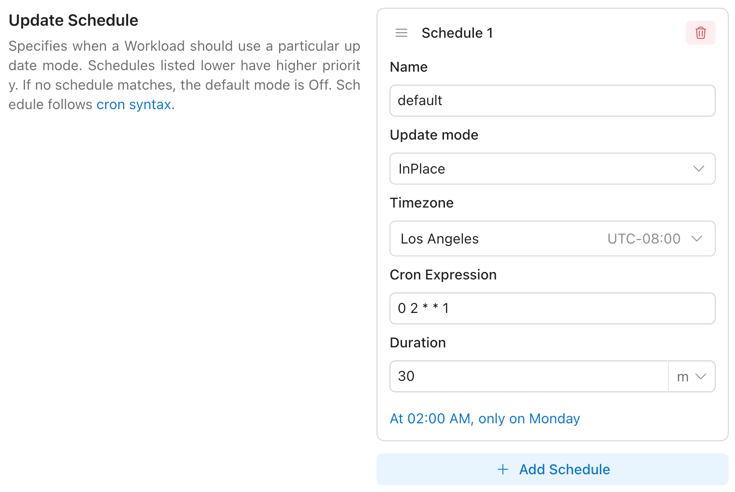

Update Schedule

UpdateSchedule defines when a Workload should use a particular update mode.

- Items listed later in the schedule have higher priority.

- If no schedule matches, the default mode is Off.

By configuring multiple Update Schedule Items, you can apply different update modes at different times. For example:

- During the day, only allow updates using the OnCreate mode.

- At night, allow updates using the ReCreate mode.

Update Schedule Item

| Field | Allowed Values / Format | Description |
|---|---|---|
| Name | Any non-empty string | Human-readable name of this schedule item. |
| Cron Expression | Cron expression (e.g., 0 2 * * *) | Start time defined by a cron schedule. Must follow standard cron syntax. Must be provided together with Duration. |
| Duration | Go/K8s duration string (e.g., 30m, 1h) | Length of time the Update Mode remains active after Cron Expression triggers. Must be provided together with Cron Expression. |
| Update Mode | OnCreate, ReCreate, InPlace, or Off | Update behavior to apply when this item is active. |
| Timezone | IANA Time Zone name | Time zone for interpreting the Cron Expression field. Defaults to UTC if not specified. |

Constraint: Cron Expression and Duration are jointly optional — either both present (time-windowed rule) or both absent (always applicable rule with the specified mode).

You can visit here to refer to how the Cron syntax of the Cron Expression field works.
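The resolution rules for an Update Schedule (later items win, items without a window always apply, and the fallback is Off) can be sketched as follows. This is a simplified model under stated assumptions: a daily start time stands in for a full Cron Expression, times are naive UTC datetimes, durations are at most 24 hours, and the `ScheduleItem` class and its field names are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, time, timedelta
from typing import Optional


@dataclass(frozen=True)
class ScheduleItem:  # hypothetical, simplified model
    name: str
    mode: str  # "OnCreate", "ReCreate", "InPlace", or "Off"
    daily_start: Optional[time] = None  # stands in for Cron Expression
    duration: Optional[timedelta] = None

    def is_active(self, now: datetime) -> bool:
        if self.daily_start is None and self.duration is None:
            return True  # no window: always applicable
        if self.daily_start is None or self.duration is None:
            raise ValueError("start and duration must be set together")
        # Check the windows opened today and yesterday (covers midnight wrap).
        for days_back in (0, 1):
            start = datetime.combine(now.date(), self.daily_start) - timedelta(days=days_back)
            if start <= now < start + self.duration:
                return True
        return False


def resolve_mode(items, now):
    """Later items win; fall back to Off when nothing matches."""
    for item in reversed(items):
        if item.is_active(now):
            return item.mode
    return "Off"


schedule = [
    ScheduleItem("default", "OnCreate"),
    ScheduleItem("night", "ReCreate", time(2, 0), timedelta(hours=1)),
]
print(resolve_mode(schedule, datetime(2024, 1, 1, 2, 30)))  # ReCreate
print(resolve_mode(schedule, datetime(2024, 1, 1, 12, 0)))  # OnCreate
print(resolve_mode([], datetime(2024, 1, 1, 12, 0)))        # Off
```

Placing the always-applicable item first and the time-windowed item last lets the window override the baseline only while it is open.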

UpdateMode

| Value | Behavior | Typical Use Case |
|---|---|---|
| OnCreate | Apply changes only when new Pods are created. | Safe, no disruption to existing Pods. |
| ReCreate | Apply changes by recreating Pods (rolling replace). | When in-place vertical changes are not supported or a clean restart is desired. |
| InPlace | Apply changes in place to running Pods (no recreate). | Minimal disruption on clusters that support in-place resource updates. |
| Off | Do not apply changes (disabled). | Temporarily pause updates. |

When the UpdateMode is set to either ReCreate or InPlace, the OnCreate mode will also be applied automatically. This ensures that when a Pod restarts normally, the newly created Pod will always receive the latest recommendations, regardless of the Drift Thresholds.

For ReCreate operations, when attempting to evict a single-replica Deployment without PVCs, we perform a rolling update to avoid service interruption during the update.

Note: The InPlace mode has certain limitations and may automatically fall back to ReCreate in some cases. For details, see InPlace Limitations.

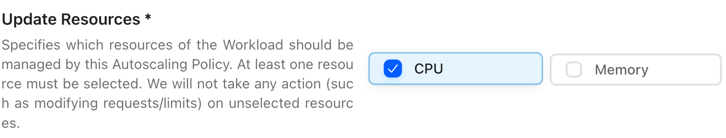

Update Resources*

UpdateResources defines which resources should be managed by the Workload Autoscaler.

Available resources: CPU / Memory.

- You must select at least one resource.

- Only the selected resources will be actively updated.

- This setting does not affect how recommendations are calculated.

If you don’t have specific requirements or if you already use HPA, we recommend allowing both CPU and Memory to be managed.

Note: When you modify Update Resources, an update operation may be triggered based on the deviation between the recommended value and the current value. This operation takes effect immediately once the conditions of the Update Schedule are met.

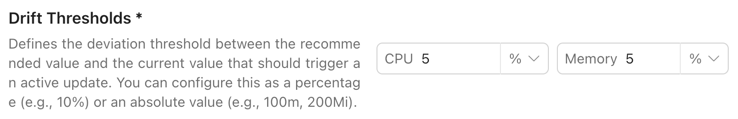

Drift Thresholds

DriftThresholds define the deviation between the recommended value and the current value that should trigger an active update.

- You can configure this as either a percentage or an absolute value.

- The default is 10%.

| Resource Type | Percentage | Absolute Value (Option 1) | Absolute Value (Option 2) |
|---|---|---|---|
| CPU | 20% | 0.5 | 200m |
| Memory | 10% | 0.25Gi | 500Mi |

If the deviation for any resource in a Pod exceeds the threshold, the Pod will be actively updated.
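The threshold check can be illustrated with a short sketch. Several details here are assumptions rather than documented behavior: the percentage is measured relative to the current value, "exceeds" is treated as a strict comparison, and the quantity parser handles only the suffixes that appear in the table above (`m`, `Ki`, `Mi`, `Gi`). All function names are hypothetical.

```python
from decimal import Decimal

# Only the suffixes used in this section; real Kubernetes quantities allow more.
_SUFFIXES = {
    "m": Decimal("0.001"),
    "Ki": Decimal(2) ** 10,
    "Mi": Decimal(2) ** 20,
    "Gi": Decimal(2) ** 30,
}


def parse_quantity(text):
    """Parse a small subset of Kubernetes quantities into cores or bytes."""
    for suffix, factor in _SUFFIXES.items():
        if text.endswith(suffix):
            return Decimal(text[: -len(suffix)]) * factor
    return Decimal(text)


def exceeds_threshold(current, recommended, threshold):
    """threshold is a percentage such as "10%" or an absolute quantity."""
    cur, rec = parse_quantity(current), parse_quantity(recommended)
    deviation = abs(rec - cur)
    if threshold.endswith("%"):
        # Assumption: the percentage is relative to the current value.
        if cur == 0:
            return deviation > 0
        return deviation / cur * 100 > Decimal(threshold[:-1])
    return deviation > parse_quantity(threshold)


def pod_needs_update(current, recommended, thresholds):
    """A Pod is updated if any managed resource drifts past its threshold."""
    return any(
        exceeds_threshold(current[r], recommended[r], thresholds[r])
        for r in thresholds
    )


current = {"cpu": "500m", "memory": "1Gi"}
recommended = {"cpu": "520m", "memory": "1.5Gi"}
print(pod_needs_update(current, recommended, {"cpu": "20%", "memory": "10%"}))  # True
```

In this example the CPU drift is only 4%, but the memory drift of 50% is enough to trigger an update on its own.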

On Policy Removal

OnPolicyRemoval defines how Pods are rolled back when an AutoscalingPolicy is removed.

- The default is Off: the configuration will be deleted, but no action will be taken on existing Pods.

| Value | Behavior on policy removal | Business impact | Recommended scenarios | Notes |
|---|---|---|---|---|
| Off | Delete the EVPAC configuration only; do not roll back Pod requests (status quo). | No restart, zero downtime | When rollback is not required; keep current resource settings after troubleshooting. | Limits are unchanged. This option makes no changes to requests/limits. |
| ReCreate | Roll back to the pre-policy requests by recreating target Workloads (rolling replace). | Restarts, brief downtime | Cluster does not support in-place vertical changes; require scheduler to reassign resources. | Ensure safe rolling strategy. Limits typically remain unchanged unless your controller handles them. |
| InPlace | Roll back to the pre-policy requests via in-place Pod updates (no recreate). | Usually zero/low disruption | Cluster supports in-place vertical resizing; prioritize minimal disturbance. | Requires cluster/runtime support for in-place updates. Limits unchanged unless otherwise implemented. |

For ReCreate operations, when attempting to evict a single-replica Deployment without PVCs, we perform a rolling update to avoid service interruption during the update.

Note: The InPlace mode has certain limitations and may automatically fall back to ReCreate in some cases. For details, see InPlace Limitations. If an unexpected situation prevents the Pod Requests from being restored for 10 minutes, the configuration is allowed to be deleted directly without restoring the Pod Requests.

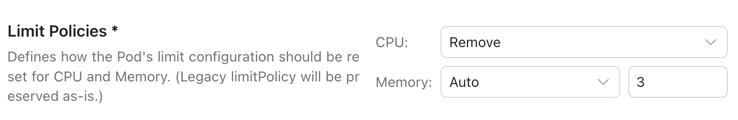

Limit Policies

Limit Policies let you control how CloudPilot AI manages CPU/Memory Limits for a workload.

In most cases, we recommend Remove so that workloads are not artificially capped (especially on CPU) and can burst when needed. Workload Autoscaler focuses on keeping Requests accurate, so the Limits configuration typically has limited impact on stability, unless you rely on Limits as a hard safety boundary.

Policy Behaviors

| Policy | Behavior |
|---|---|
| Remove | Removes Pod limits (no CPU/Memory caps). |
| Keep | Keeps existing Pod limits unchanged. |
| Multiplier | Recalculates limits by multiplying the recommended Requests by a configured multiplier. |
| Auto | Same as Multiplier, but only applies the update when the new Limits are higher than the current/original Limits, ensuring Limits never become lower than the default configuration. |

When changes take effect

Updating the Limit Policy may trigger a rollout/update. The decision depends on:

- how far the current values deviate from the recommended values, and

- whether existing Pods match the expected limits configuration.

Once the configured Update Schedule conditions are met, changes are applied immediately.

Operational guidance

When using Multiplier / Auto, we strongly recommend setting a reasonable lower bound for CPU/Memory recommendations. In rare cases (e.g., test environments with extremely low observed usage), recommendations may be too small to support stable startup or sudden traffic spikes.

Note: With Keep, the final recommended values will never exceed your configured Limits. If you want Pods to burst beyond current caps when needed, consider Remove or Multiplier.
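The four policy behaviors can be compared side by side with a small sketch that computes the resulting Request and Limit for a single resource. It is an illustration only: the function name and signature are hypothetical, values are plain numbers in one unit (for example millicores), and the handling of Auto for a Pod that has no existing limit is an assumption.

```python
def apply_limit_policy(policy, recommended_request, current_limit, multiplier=None):
    """Return (request, limit) for one resource; a limit of None means no cap."""
    if policy == "Remove":
        return recommended_request, None
    if policy == "Keep":
        if current_limit is None:
            return recommended_request, None
        # With Keep, the recommendation never exceeds the configured limit.
        return min(recommended_request, current_limit), current_limit
    if policy in ("Multiplier", "Auto"):
        if multiplier is None:
            raise ValueError("multiplier is required for Multiplier and Auto")
        new_limit = recommended_request * multiplier
        # Auto only raises limits. Assumption: with no existing limit,
        # the computed limit is applied.
        if policy == "Auto" and current_limit is not None and new_limit <= current_limit:
            return recommended_request, current_limit
        return recommended_request, new_limit
    raise ValueError(f"unknown policy: {policy}")


print(apply_limit_policy("Remove", 500, 1000))          # (500, None)
print(apply_limit_policy("Keep", 1200, 1000))           # (1000, 1000)
print(apply_limit_policy("Multiplier", 500, 2000, 2))   # (500, 1000)
print(apply_limit_policy("Auto", 500, 2000, 2))         # (500, 2000)
print(apply_limit_policy("Auto", 1500, 2000, 2))        # (1500, 3000)
```

The Multiplier and Auto rows differ only when the computed limit would be lower than the existing one: Multiplier lowers it, Auto leaves it in place.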

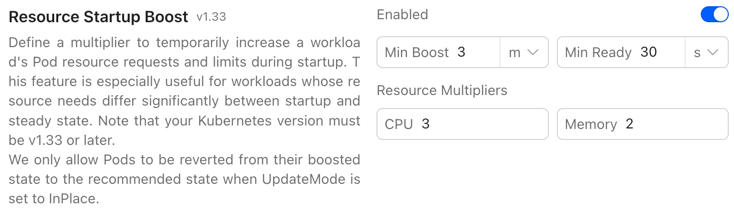

ResourceStartupBoost

ResourceStartupBoost allows you to apply a temporary resource boost during workload startup. This is designed for workloads that require significantly more resources at startup than at steady state (e.g., the Java startup spike).

Fields

| Field | Allowed Values / Format | Description |
|---|---|---|
| Enabled | Bool | Enables or disables ResourceStartupBoost. |
| Min Boost | Time Duration | Minimum duration that Startup Boost remains active per Pod. |
| Min Ready | Time Duration | Minimum time a Pod must stay in Ready state before resources can be restored. |
| CPU Resource Multiplier | [1, 5] | Multiplier applied to the recommended CPU Requests and Limits during Boost. |
| Memory Resource Multiplier | [1, 5] | Multiplier applied to the recommended Memory Requests and Limits during Boost. |

Memory boost behavior (InPlace Update limitation)

Due to InPlace Update limitations, when boosting Memory, CloudPilot AI will increase Requests only, and will not increase Limits. After boosting, the boosted Requests value will never exceed the configured Limits.
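The boost arithmetic, including the memory cap described above, can be sketched as follows. The function names and signatures are hypothetical, values are plain numbers in one unit, and the restore condition (both Min Boost and Min Ready must have elapsed) is an assumed reading of the field descriptions rather than confirmed behavior.

```python
from datetime import timedelta


def boosted_resources(rec_request, limit, multiplier, resource):
    """Return (request, limit) during Startup Boost for "cpu" or "memory"."""
    if not 1 <= multiplier <= 5:
        raise ValueError("multiplier must be within [1, 5]")
    boosted_request = rec_request * multiplier
    if resource == "cpu":
        boosted_limit = None if limit is None else limit * multiplier
        return boosted_request, boosted_limit
    if resource == "memory":
        # Memory limits are not raised, so the request is capped at the limit.
        if limit is not None:
            boosted_request = min(boosted_request, limit)
        return boosted_request, limit
    raise ValueError(f"unknown resource: {resource}")


def can_restore(boost_elapsed, ready_elapsed, min_boost, min_ready):
    """Assumption: resources are restored only after both minimums have passed."""
    return boost_elapsed >= min_boost and ready_elapsed >= min_ready


print(boosted_resources(500, 1000, 2, "cpu"))      # (1000, 2000)
print(boosted_resources(512, 768, 2, "memory"))    # (768, 768)
print(boosted_resources(256, None, 3, "memory"))   # (768, None)
print(can_restore(timedelta(minutes=5), timedelta(seconds=30),
                  timedelta(minutes=2), timedelta(minutes=1)))  # False
```

The second call shows the memory limitation in practice: a 2x multiplier would ask for 1024, but the request stops at the existing limit of 768.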

Requirements

To use ResourceStartupBoost:

- Kubernetes version must be v1.33+

- Workload UpdateMode must be set to InPlace at all times

Otherwise, CloudPilot AI will not restore the workload’s resources after startup.